To start tracing from the GUI, click on Tools→Start Trace. A dialog will pop up and prompt for specific Trace settings other than the default. After starting the trace, any time you modify properties, create filters, open files, hit Apply, etc., your actions will be translated into Python syntax. Once you are finished tracing the actions you want to script, click Tools→Stop Trace. A Python script should then be displayed to you and can be saved.

If you plan on using the EGL version of the ParaView module (e.g., paraview/5.9.1-egl), then you must be connected to the GPUs. On Andes this is done by using the gpu partition via #SBATCH -p gpu, while on Summit the -g flag in the jsrun command must be greater than zero.

Submitting the batch script sends a job to the batch partition for five minutes using 28 MPI tasks across 1 node. Once the job makes its way through the queue, the script will launch the loaded ParaView module (specified with module load) and execute a Python script called para_example.py. The example Python script is detailed below, and users are highly encouraged to use this script (especially after version …).

Because memory issues vary widely across datasets and MPI task counts, users are encouraged to find the optimal number of MPI tasks for their data. Users with large datasets may also find a slight increase in performance by using the gpu partition on Andes, or by utilizing the GPUs on Summit.

The following script renders a 3D sphere colored by the ID (rank) of each MPI task:

```python
# para_example.py:
from paraview.simple import *

# Add a polygonal sphere to the 3D scene
s = Sphere()
s.ThetaResolution = 128   # Number of theta divisions (longitude lines)
s.PhiResolution = 128     # Number of phi divisions (latitude lines)

# Convert Proc IDs to scalar values
p = ProcessIdScalars()    # Apply the ProcessIdScalars filter to the sphere

display = Show(p)             # Show data
curr_view = GetActiveView()   # Retrieve current view

# Generate a colormap for Proc Id's
cmap = GetColorTransferFunction("ProcessId")   # Generate a function based on Proc ID
# cmap.… (the listing breaks off here)
```
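The batch job described here (batch partition, five minutes, 28 MPI tasks on 1 node, EGL modules on the gpu partition) can be sketched as a small Python helper that writes out a Slurm script. This is only an illustration under stated assumptions: the function name `make_batch_script`, the account placeholder `XXXYYY`, the default module name `paraview/5.9.1-osmesa`, and the `srun -n … pvbatch …` launch line are not taken from the text above and should be checked against your site's documentation.

```python
def make_batch_script(module="paraview/5.9.1-osmesa",  # assumed module name
                      script="para_example.py",
                      nodes=1, tasks=28, minutes=5,
                      account="XXXYYY"):                # placeholder project ID
    """Return the text of a Slurm batch script that runs `script` with pvbatch."""
    # EGL builds need GPUs, so they go to the gpu partition; others use batch.
    partition = "gpu" if module.endswith("-egl") else "batch"
    hours, mins = divmod(minutes, 60)
    lines = [
        "#!/bin/bash",
        f"#SBATCH -A {account}",
        f"#SBATCH -N {nodes}",
        f"#SBATCH -p {partition}",
        f"#SBATCH -t {hours}:{mins:02d}:00",
        "",
        f"module load {module}",
        # Assumed launch line: one pvbatch process per MPI task.
        f"srun -n {tasks} pvbatch {script}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(make_batch_script())
```

Calling `make_batch_script(module="paraview/5.9.1-egl")` switches the partition line to `#SBATCH -p gpu`; changing `tasks` is a quick way to experiment with the number of MPI tasks for a given dataset.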

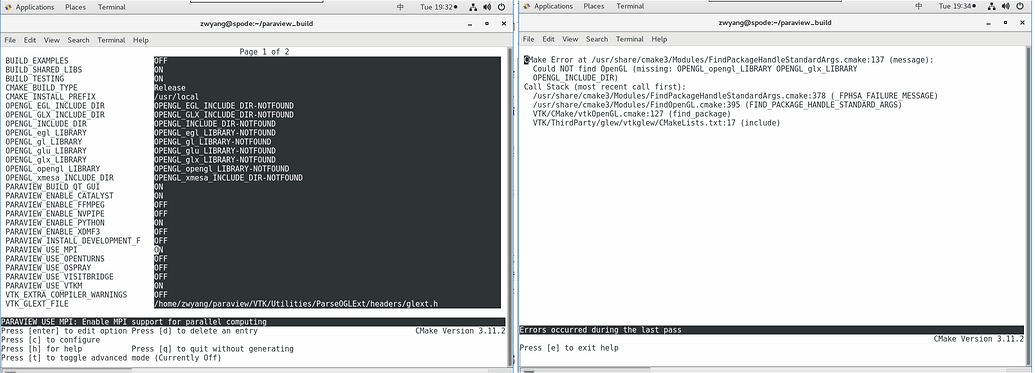

ParaView MPI how-to

On Stampede2, the data server runs on the cluster. It loads and executes the modules you select for your visualization. Each MPI task is told which partition, or piece of the data, it should process. The render server, too, can run in parallel under MPI if it is configured to do so, with the restriction that it cannot use more processes than the data server. Each render server process receives geometry from the data servers in order to render a portion of the screen.

One of the most convenient tools available in the GUI is the ability to convert (or "trace") interactive actions in ParaView to Python code. Users who repeat a sequence of actions in ParaView to visualize their data may find the Trace tool useful. The Trace tool creates a Python script that reflects most actions taken in ParaView, which can then be used by either PvPython or PvBatch (ParaView's Python interfaces) to accomplish the same actions. See section Command Line Example for an example of how to run a Python script.
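To make the data server's partitioning and the render server restriction concrete, here is a minimal sketch in plain Python. It is not ParaView's own partitioning logic (that depends on the reader and the data type); the helper names `piece_range` and `check_server_sizes` are hypothetical, and the even block split is just one simple way to hand each MPI task a piece.

```python
def piece_range(n_items, n_pieces, piece):
    """Return the half-open range [start, stop) of items owned by one piece.

    Items are split into contiguous blocks whose sizes differ by at most one.
    """
    if n_pieces <= 0 or not 0 <= piece < n_pieces:
        raise ValueError("piece must satisfy 0 <= piece < n_pieces")
    base, extra = divmod(n_items, n_pieces)
    # The first `extra` pieces each take one additional item.
    start = piece * base + min(piece, extra)
    stop = start + base + (1 if piece < extra else 0)
    return start, stop


def check_server_sizes(data_procs, render_procs):
    """Raise if the render server would use more processes than the data server."""
    if render_procs > data_procs:
        raise ValueError(
            f"render server ({render_procs}) cannot use more processes "
            f"than the data server ({data_procs})"
        )


if __name__ == "__main__":
    check_server_sizes(data_procs=4, render_procs=2)
    for rank in range(4):
        print(rank, piece_range(10, 4, rank))
```

With 10 items and 4 tasks, the pieces cover every item exactly once, and no task holds more than one item more than any other.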

The ParaView application can run on a whole cluster at the same time. It starts as many separate processes, possibly on different machines. It has three main logical components: a client, responsible for the user interface; a data server, which reads and processes data sets to create final geometric models; and a render server, which renders that final geometry. The data server is a set of processes that communicate with MPI.
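From the Python side, the three components map onto how a scripted client attaches to the servers. The sketch below assumes the `Connect` function in `paraview.simple` accepts `ds_host`, `ds_port`, `rs_host`, and `rs_port` keyword arguments, as it does in recent ParaView releases; the host names and port numbers are placeholders, and `connection_kwargs` and `connect_client` are hypothetical helpers rather than part of ParaView.

```python
def connection_kwargs(data_host, data_port, render_host=None, render_port=None):
    """Build keyword arguments for connecting a client to the servers.

    If no render host is given, the data server is assumed to render as well.
    """
    kwargs = {"ds_host": data_host, "ds_port": data_port}
    if render_host is not None:
        if render_port is None:
            raise ValueError("render_port is required when render_host is set")
        kwargs["rs_host"] = render_host
        kwargs["rs_port"] = render_port
    return kwargs


def connect_client(**kwargs):
    """Connect this Python client; needs ParaView's Python environment."""
    from paraview.simple import Connect  # imported lazily on purpose
    return Connect(**kwargs)


if __name__ == "__main__":
    # Placeholder hosts and ports; substitute the nodes your servers run on.
    print(connection_kwargs("data-node", 11111, "render-node", 22221))
```

Keeping the argument assembly separate from the actual `Connect` call means the configuration can be checked on a machine that does not have ParaView installed.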
